Reporting quality of evidence synthesis in human immunodeficiency virus (HIV): Examining articles that guide treatment recommendations

Purpose: Evidence synthesis, which includes article types such as systematic reviews and meta-analyses, can be helpful in the development of evidence-based guidelines. However, the inclusion of an evidence synthesis article in guidelines does not mean that the article is of good quality. We aim to assess reporting quality, one dimension of an article’s overall quality, using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA). We will determine whether evidence synthesis citations in the Department of Health and Human Services human immunodeficiency virus guidelines follow trends for poor reporting quality or constitute a benchmark for the best evidence available in HIV.

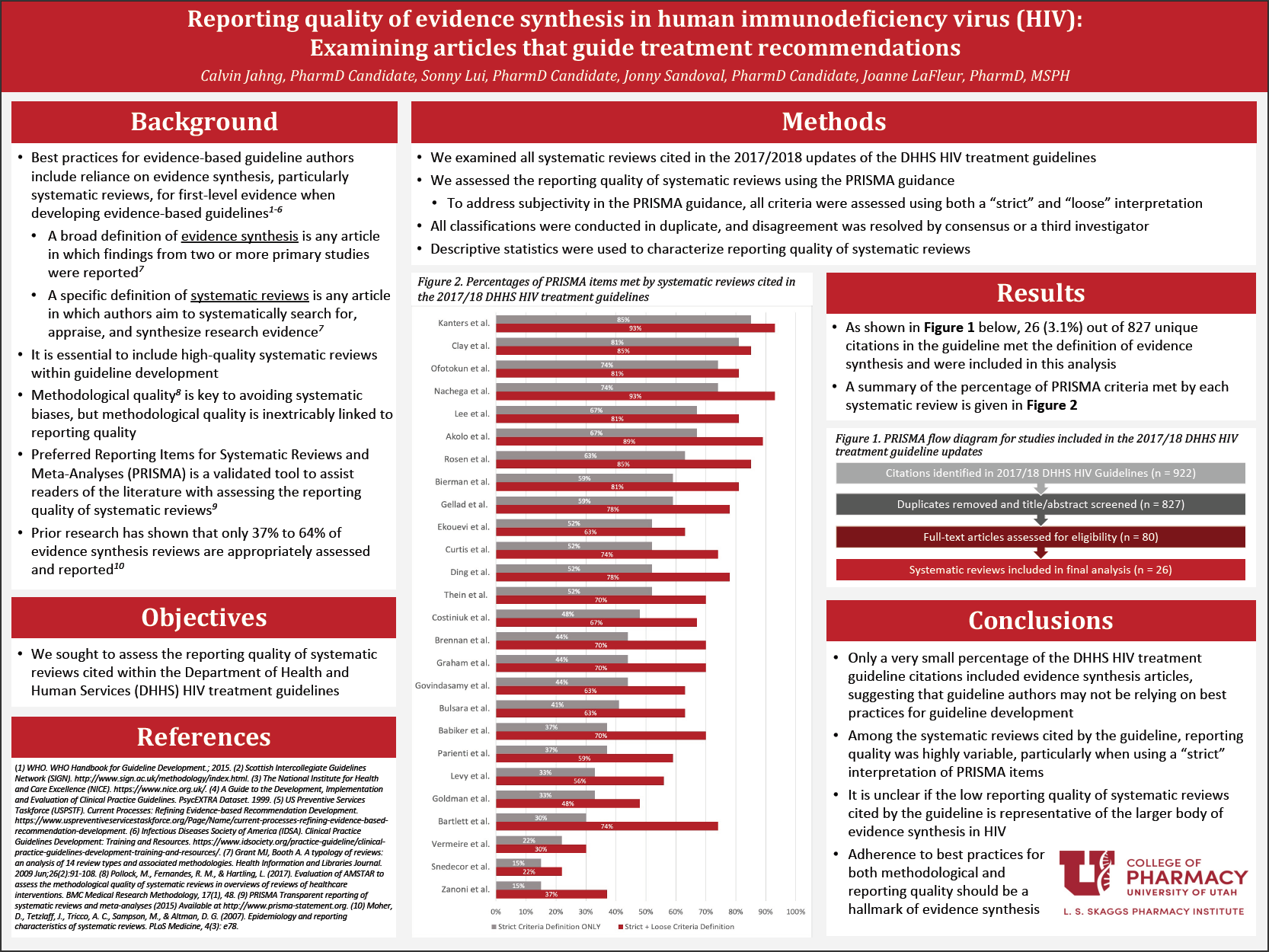

Methods: PubMed citations for all articles cited in the 2017 and 2018 Department of Health and Human Services (DHHS) human immunodeficiency virus (HIV) treatment guidelines were entered into EndNote, and duplicate citations were removed. Two study investigators independently classified each citation as evidence synthesis or not using the title and/or abstract, defining evidence synthesis as “any article in which the results of 2 or more primary studies were combined.” A third investigator resolved disagreements. For articles that the reviewers could not classify using the title and abstract alone, the full text was reviewed. Articles that did not fit the study definition of evidence synthesis were excluded. A data collection form consisting of PRISMA criteria was created within Research Electronic Data Capture (REDCap) and used by two investigators to independently perform a full-text review of the articles that met inclusion criteria. Disagreements were resolved by consensus or by a third party. Data analyses consisted of frequencies, percentages, means, and standard deviations, with confidence intervals calculated appropriately.
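As a rough illustration of the descriptive analysis described in the Methods, the following Python sketch computes per-article adherence percentages under strict and combined (strict plus loose) interpretations, then summarizes them. The ratings, article names, rating labels, and the normal-approximation confidence interval are all assumptions made for illustration; they are not the study data or necessarily the study’s method.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical ratings, NOT study data: one list per article, one entry per
# checklist item. "strict" = item fully reported, "loose" = reported only
# under a lenient reading, "no" = not reported.
ratings = {
    "article_a": ["strict", "strict", "loose", "no"],
    "article_b": ["strict", "no", "no", "no"],
    "article_c": ["strict", "strict", "strict", "loose"],
}

def adherence(items, accepted):
    """Percentage of checklist items whose rating is in `accepted`."""
    return 100 * sum(r in accepted for r in items) / len(items)

# Per-article percentages under each interpretation.
strict_pct = {a: adherence(r, {"strict"}) for a, r in ratings.items()}
combined_pct = {a: adherence(r, {"strict", "loose"}) for a, r in ratings.items()}

def summarize(values, z=1.96):
    """Mean, sample SD, range, and an approximate 95% CI for the mean.

    The CI uses a normal approximation (z = 1.96); this is an assumption,
    since the abstract does not state which interval method was used.
    """
    values = list(values)
    m = mean(values)
    sd = stdev(values)
    half_width = z * sd / sqrt(len(values))
    return {
        "mean": m,
        "sd": sd,
        "ci": (m - half_width, m + half_width),
        "range": (min(values), max(values)),
    }

strict_summary = summarize(strict_pct.values())
combined_summary = summarize(combined_pct.values())
print(strict_summary)
print(combined_summary)
```

With a real extraction, each list would hold one rating per PRISMA checklist item, and the same functions would apply unchanged.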

Results: A total of 26 articles were included in the final analysis. No article included 100% of the PRISMA items; under the strict criteria alone, articles reported 15% to 85% of items. When strict and loose interpretations of the criteria were combined, the range became 22% to 93%. When each individual PRISMA item was considered, the three best-performing items concerned the rationale for conducting the systematic review, the reporting of pooled results, and the description of findings in the context of other research. The three worst-performing items concerned the description of methods for assessing study-level risk of bias, the assessment of review-level risk of bias, and reference to a review protocol.

Conclusion: Within our corpus of systematic reviews and meta-analyses, reporting quality based on established PRISMA criteria varies greatly, with articles reporting as few as 15% and as many as 85% of total items. Although these types of studies are considered the highest possible level of evidence, that does not necessarily mean that they are of good quality. Critical appraisal is still a necessary element in determining whether a study should be used as a recommendation reference. Until more guidance is developed, readers should be aware that rigorous evaluation of an article remains a necessary practice.

Published in College of Pharmacy, Virtual Poster Session Spring 2020

Jonny, Calvin, Sonny: This was a very enlightening piece of research. Somewhat appalling that more than 50% was considered not of good quality per the standard guidelines used.

Thank you Jane!

Calvin, nicely presented poster. I am curious whether you think these findings would likely generalize to studies cited for guidelines for other diseases or if you think there’s something particular about the HIV guidelines? Also, do you think, given this significant limitation, that there are likely errors in guidelines that could affect patient outcomes?

Dr. Keefe,

Those are some great questions. I’d like to be optimistic about guidelines for other disease states; however, it concerns me that this set of guidelines came from the Department of Health and Human Services and they did not appear to critically evaluate the studies that they included (or at least comment on the quality of the studies). I’m not sure if there is something specific about HIV that would make it particularly vulnerable to this kind of poor reporting quality, as the PRISMA criteria/items are general and should apply to all systematic reviews and meta-analyses. I do recognize that in a set of guidelines as long and comprehensive as the DHHS HIV guidelines, it would take a very long time to appraise every citation that they used.

As for the errors in guidelines, it does make me more sensitive to this type of poor reporting quality and motivates me to be more careful about just taking the guideline’s word for it. I think it highlights the importance of reviewing 2-3 other sources, including primary literature, to back up what we see in the guidelines before just recommending what they say.

Calvin – I like your work. I wonder if there have been any studies looking at the relationship between reporting quality and the quality of systematic review work. Can you share any insights? If there are none, what tools do you think should be used to assess the quality of systematic reviews, and why?

Dr. Chaiyakunparuk,

Thank you! I appreciate your comment. That is a great question. I know there are a few studies that investigate both reporting quality and the methodological quality, which sounds like what you are referring to if I understand correctly. Interestingly, Jonathan Sandoval’s project looks into ROBIS, a tool to assess methodological quality, and Dr. LaFleur has been on a project that looks into AMSTAR, both with this set of HIV guidelines. We are planning on looking at the results of each of these studies together to get a better idea of how both types of quality are related. This is especially important because authors may have conducted their study rigorously, but without reporting it well throughout their manuscript, it would be difficult to see just how high-quality it really is. This is an area of future work that we hope to continue on.

Nicely done, Calvin. What was one thing you learned while working on this project that you think you’ll apply in your future career?

Dr. Witt,

Thank you! I think one thing that I plan on implementing in my future career is to always be conscious of what I am recommending based on guidelines. I think it is important to consider gathering 2-3 resources and to include primary literature when asked for a recommendation. It becomes very easy to read something in guidelines and trust what they say, but we aren’t always completely aware of the quality of the studies the authors use for their citations. Critical appraisal of studies is a good habit to carry into clinical practice.

Good job Calvin. Very important conclusion.

Thank you Dr. Barrows!

This is a great project. Another future direction of this project is to look at other meta-analyses that looked at this question in other disease states. I think you will find that the level of evidence included is often appalling.

Dr. Tyler,

Thank you! I appreciate your comment. I agree, it’s made me much more aware of the variable quality that exists out there and more sensitive to the need for critical appraisal of studies. I think it would be very interesting to see what other research has found in other disease states.